For this example, we’ll be using what’s called the logistic sigmoid function. We’ll feed in our two inputs, each of which is either 0 or 1, and each will be multiplied by its synaptic weight. We’ll adjust the weights until we get an accurate output each time and are confident the neural network has learned the pattern. The activations used in our present model are “relu” for the hidden layer and “sigmoid” for the output layer.
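
A minimal sketch of the two activations named above, plus the sigmoid's derivative (which backpropagation needs), is shown below. The function names are our own, and the sigmoid is written in a numerically stable form so that very large negative inputs do not overflow `math.exp`.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid: squashes any real number into the range (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # avoids overflow of exp(-z) for very negative z
    return e / (1.0 + e)

def sigmoid_derivative(z: float) -> float:
    """Derivative of the sigmoid, expressed through the sigmoid itself."""
    s = sigmoid(z)
    return s * (1.0 - s)

def relu(z: float) -> float:
    """ReLU, the hidden-layer activation: passes positives, zeroes negatives."""
    return max(0.0, z)

print(sigmoid(0.0))             # 0.5
print(sigmoid_derivative(0.0))  # 0.25
print(relu(-3.0), relu(2.0))    # 0.0 2.0
```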

Furthermore, as training converges, we would expect all the gradients to approach zero. In larger networks the error can jump around quite erratically, so smoothing (e.g. an exponentially weighted moving average, EWMA) is often used to see the decline. A single perceptron, therefore, cannot separate the two classes of our XOR gate, because it can only draw one straight line.
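
To make the EWMA idea concrete, here is a minimal sketch that smooths a list of noisy per-iteration loss values. The `alpha` value (the weight given to the newest observation) and the sample loss numbers are made up for illustration.

```python
def ewma(values, alpha=0.1):
    """Exponentially weighted moving average of a sequence of loss values.

    alpha is the weight of the newest value; a smaller alpha smooths more.
    """
    smoothed = []
    avg = None
    for v in values:
        # The first value seeds the average; later values are blended in.
        avg = v if avg is None else alpha * v + (1 - alpha) * avg
        smoothed.append(avg)
    return smoothed

noisy_loss = [1.0, 0.6, 0.9, 0.4, 0.7, 0.3, 0.5, 0.2]  # illustrative values
smoothed = ewma(noisy_loss, alpha=0.5)
print([round(x, 3) for x in smoothed])
```

The smoothed curve still trends downward but swings far less than the raw values, which makes the decline easier to see.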

Also, the loss function reveals a large deviation between the predicted and desired values of the πt-neuron model. Further, to assess the applicability and generalization of our proposed single neuron model, we have varied the input dimension and the number of input samples used to train it. We have considered three cases, with 10³, 10⁴, and 10⁶ samples in the dataset, respectively. The results show that the loss depends on the number of samples in the dataset. Yadav et al. have also used a single multiplicative neuron model for time series prediction problems.

XOR Dataset

We will use 16 neurons with ReLU as the activation function for this layer. The logic gate takes two input values, each T (true) or F (false), analogous to the binary values 1 and 0. Input is fed to the neural network in the form of a matrix, so we have to define the dimensions (a.k.a. shape) of the input and output matrices. X has two columns because one input set has two values, and Y has a single column because each input set has one output.
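
A small sketch of these matrices in plain Python follows. Stacking all four rows of the truth table into one matrix is our assumption (the article may feed the rows differently), so the row count here is 4; the column counts match the text.

```python
# XOR truth table with T/F encoded as 1/0.
X = [
    [0, 0],
    [0, 1],
    [1, 0],
    [1, 1],
]
# One target per input set: XOR is 1 only when exactly one input is 1.
Y = [[a ^ b] for a, b in X]

def shape(matrix):
    """(rows, columns) of a list-of-lists matrix."""
    return (len(matrix), len(matrix[0]))

print(shape(X))  # (4, 2): four input sets, two values each
print(shape(Y))  # (4, 1): one output per input set
print(Y)         # [[0], [1], [1], [0]]
```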

We are also using a supervised learning approach to solve XOR with a neural network. I was trying to implement an XOR gate with TensorFlow. I succeeded, but I don’t fully understand why it works, both with and without one-hot encoded outputs. Here is the network as I understood it, to make things clear.

Change in the outer layer weights

Note that Xo is simply the output of the hidden layer nodes. An XOR dataset consists of the four combinations of two binary inputs, each labeled with the XOR of its inputs: (0, 1) and (1, 0) belong to class 1, while (0, 0) and (1, 1) belong to class 0. Because the two classes lie on opposite diagonals, no single straight line can separate them. This type of dataset is often used in machine learning to test whether a model can distinguish between classes that are not linearly separable.
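
As a worked sketch of the outer-layer update, assume a single sigmoid output unit trained with binary cross-entropy (the training loss this article names). Here $X_{o,j}$ is the output of hidden node $j$, $w_j$ its weight into the output, $b$ the output bias, $y$ the target, $\hat{y}$ the prediction, and $\eta$ the learning rate; apart from $X_o$, these symbols are our own notation.

$$
\begin{aligned}
z &= \sum_j w_j X_{o,j} + b, \qquad \hat{y} = \sigma(z) \\
L &= -\big[\, y \log \hat{y} + (1 - y) \log (1 - \hat{y}) \,\big] \\
\frac{\partial L}{\partial z} &= \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial z}
  = \frac{\hat{y} - y}{\hat{y}(1 - \hat{y})} \cdot \hat{y}(1 - \hat{y}) = \hat{y} - y \\
\Delta w_j &= -\eta \, \frac{\partial L}{\partial w_j} = -\eta \, (\hat{y} - y) \, X_{o,j}
\end{aligned}
$$

So each outer weight moves in proportion to the output error and to the hidden-node output that feeds it.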

In the XOR network, the weight of -2 from the hidden neuron to the output neuron ensures that the output neuron will not fire when both input neurons are on (ref. 2). For learning to happen, we need to train our model with sample input/output pairs; such learning is called supervised learning.
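
The -2 weight can be checked with a hand-wired network of threshold units. In this sketch only the -2 comes from the text; the direct input-to-output connections (weight 1 each) and the thresholds 1.5 and 0.5 are our assumptions, chosen as one common way to wire such a network.

```python
def step(z: float) -> int:
    """Threshold unit: fires (1) when its net input is positive."""
    return 1 if z > 0 else 0

def xor_net(x1: int, x2: int) -> int:
    # Hidden neuron acts as AND: it fires only when both inputs are on.
    hidden = step(1.0 * x1 + 1.0 * x2 - 1.5)
    # Output neuron sees both inputs directly plus the hidden neuron through
    # a weight of -2, which cancels the inputs exactly when both are on.
    return step(1.0 * x1 + 1.0 * x2 - 2.0 * hidden - 0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```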

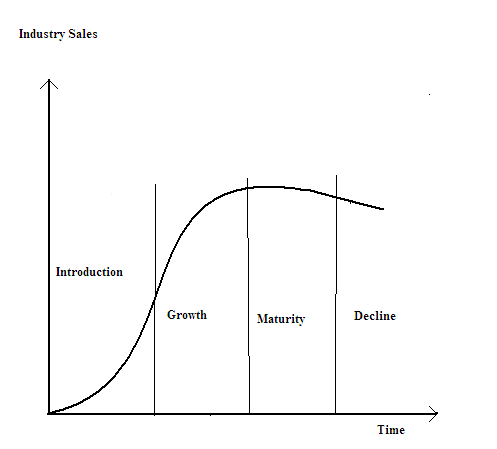

Based on the problem at hand, we expect different kinds of output; e.g., for a cat recognition task we expect the system to output Yes or No for cat or not cat, respectively. Such problems are called two-class (binary) classification problems. Some advanced tasks, like language translation and text summary generation, have complex output spaces, which we will not consider in this article. The activation function in the output layer is selected based on the output space. For a binary classification task a sigmoid activation is the usual choice, while for multi-class classification softmax is the most popular choice.
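
A minimal sketch contrasting the two output activations follows; the logit values are made up for illustration. The last line shows that, with two classes, softmax over the logits [z, 0] reduces to the sigmoid of z, which is why sigmoid is enough for binary tasks.

```python
import math

def sigmoid(z):
    """Binary output: a single probability, e.g. P(cat); P(not cat) = 1 - P(cat)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    """Multi-class output: one probability per class, summing to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

p_cat = sigmoid(1.2)
print("cat:", p_cat, "not cat:", 1 - p_cat)

probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))

# With two classes, softmax over logits [z, 0] equals sigmoid(z).
print(softmax([1.2, 0.0])[0], sigmoid(1.2))
```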

Forward Propagation

Initializing all the weights to the same value makes the network symmetric: every hidden neuron computes the same output and receives the same update, so the neural network loses its advantage of being able to map non-linearity and behaves much like a linear model. The next step is to define a neural network that is able to solve the XOR problem. As mentioned above, the XOR function cannot be separated by one boundary line. This is also the reason why the function cannot be solved by a simple Perceptron.
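
A quick way to see the single-line limitation is to search a grid of candidate lines w1·x1 + w2·x2 + b = 0 and count how many put every point on its class's side. The grid (weights and bias from -2 to 2 in steps of 0.5) is an arbitrary choice, so this is only an illustration. The general proof is short: XOR would need b ≤ 0, w1 + b > 0, w2 + b > 0 and w1 + w2 + b ≤ 0, but adding the middle two gives w1 + w2 + b > -b ≥ 0, a contradiction.

```python
import itertools

OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def separates(w1, w2, b, data):
    """True if w1*x1 + w2*x2 + b > 0 exactly for the points labeled 1."""
    return all((w1 * x1 + w2 * x2 + b > 0) == bool(t) for (x1, x2), t in data)

GRID = [i / 2 for i in range(-4, 5)]  # candidate values -2.0, -1.5, ..., 2.0

def count_separating_lines(data):
    """Number of grid lines that separate the two classes perfectly."""
    return sum(
        separates(w1, w2, b, data)
        for w1, w2, b in itertools.product(GRID, repeat=3)
    )

print("OR :", count_separating_lines(OR_DATA))   # at least one line works
print("XOR:", count_separating_lines(XOR_DATA))  # no line works
```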

A problem is linearly separable if a single hyperplane can serve as the decision boundary between the classes. The L1 loss obtained in these three experiments for the πt-neuron model and the proposed model is given in Table 3. This loss function is used only to visualize the comparison between the models; as mentioned earlier, we have used the binary cross-entropy loss function to train our model. Historically, one of the main problems with neural networks was that the gradients became too small too quickly as the network grew.
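
The two losses mentioned here can be sketched as follows. The predictions are made-up numbers, and clipping probabilities with `eps` is our own safeguard against `log(0)`. The last line hints at why cross-entropy is preferred for training: it punishes a confident wrong prediction far more than L1 does.

```python
import math

def l1_loss(y_true, y_pred):
    """Mean absolute error: here only a way to visualise deviations."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy, the loss used for training."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

y_true = [0, 1, 1, 0]
y_pred = [0.1, 0.8, 0.7, 0.3]  # illustrative predictions
print(l1_loss(y_true, y_pred), binary_cross_entropy(y_true, y_pred))

# A confident wrong answer: L1 stays below 1, cross-entropy grows large.
print(l1_loss([1], [0.01]), binary_cross_entropy([1], [0.01]))
```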

Training algorithm

Therefore, it introduces an extra negative sign for an even number of input bits, so that input combinations belonging to the same class remain in that class. Mathematically, Iyoda et al. have shown that the model can solve the logical XOR and N-bit parity problems for all N ≥ 1. However, this model also has a similar issue in training for higher-order inputs. The XOR problem can be solved with a Multi-Layer Perceptron, i.e., a neural network architecture with an input layer, a hidden layer, and an output layer. During forward propagation the inputs pass through the weighted layers and the XOR logic gets executed; the weights of each layer are then updated during backpropagation.

Further, we have compared the training performance of the πt-neuron model with our proposed model for the 10-bit parity problem. Training results for both models are shown in Figure 7 (binary cross-entropy loss plotted against the number of iterations). The enhanced πt-neuron is based on the multiplicative neuron model. The multiplicative model suffers from a class reversal problem.
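
For readers who want to reproduce a parity experiment, here is a minimal data generator. It enumerates all 2^N binary inputs and labels each one 1 when it contains an odd number of ones. Whether the experiments above used the full truth table or a random sample of it is not stated, so using the full table is our assumption. Note that 2-bit parity is exactly XOR.

```python
import itertools

def parity_dataset(n_bits):
    """All 2**n_bits binary input vectors, labeled 1 when the count of 1s is odd."""
    X = [list(bits) for bits in itertools.product((0, 1), repeat=n_bits)]
    y = [sum(bits) % 2 for bits in X]
    return X, y

X2, y2 = parity_dataset(2)
print(X2, y2)              # [[0, 0], [0, 1], [1, 0], [1, 1]] [0, 1, 1, 0]

X10, y10 = parity_dataset(10)
print(len(X10), sum(y10))  # 1024 512
```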

Furthermore, these areas represent their respective classes simply by their sign (i.e., a negative area corresponds to class 1 and a positive area to class 2). The Iris dataset is a good choice for understanding which features are important for predicting the flower species. Many machine learning and neural network courses use this dataset as a reference for teaching model building.

Summarised neural network training process

After training the model, we will calculate the accuracy score and print the predicted output on the test data. The learning rate determines how much the weights and bias change at each iteration as the loss is minimized; we have set it to 0.1. We have defined the getORdata function for fetching inputs and outputs. Similarly, we can define getANDdata and getXORdata functions using the same set of inputs. In some practical cases, for example when collecting online product reviews in which some parameters are optional fields, we may get missing input values.
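
The bodies of getORdata, getANDdata and getXORdata are not shown in the text, so the versions below are hypothetical reconstructions: each one returns the shared inputs together with the gate-specific targets. The `accuracy_score` here is a small stand-in written for this sketch, not scikit-learn's function of the same name.

```python
# Hypothetical helpers: same four inputs, different target columns.
INPUTS = [[0, 0], [0, 1], [1, 0], [1, 1]]

def getORdata():
    return INPUTS, [a | b for a, b in INPUTS]

def getANDdata():
    return INPUTS, [a & b for a, b in INPUTS]

def getXORdata():
    return INPUTS, [a ^ b for a, b in INPUTS]

def accuracy_score(y_true, y_pred):
    """Fraction of predictions that match the targets."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

X, y = getXORdata()
print(y)                                # [0, 1, 1, 0]
print(accuracy_score(y, [0, 1, 1, 1]))  # 0.75
```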

Weights and biases are initialized to random values or set to 0, and then updated as training progresses. The bias is analogous to a weight that is independent of any input node. Basically, it makes the model more flexible, since you can “move” the activation function around. M maps the internal representation to the output scalar.
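
To see what “moving” the activation function means, here is a single sigmoid neuron with one input, where `w` and `b` are illustrative values. Without a bias, the output at x = 0 is stuck at 0.5 whatever the weight; with a bias, the 0.5 crossing shifts to x = -b / w.

```python
import math

def neuron(x, w, b):
    """Single sigmoid neuron with one input: sigmoid(w*x + b)."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))

# No bias: every weight gives exactly 0.5 at x = 0.
print([neuron(0.0, w, 0.0) for w in (-5.0, 0.5, 5.0)])  # [0.5, 0.5, 0.5]

# With a bias, the midpoint of the curve moves to x = -b / w = 1.5.
w, b = 2.0, -3.0
print(neuron(-b / w, w, b))  # 0.5
print(neuron(0.0, w, b))     # well below 0.5 now
```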

Remember the linear activation function we used on the output node of our perceptron model? You may have heard of the sigmoid and tanh functions, which are among the most popular non-linear activation functions. The most important thing to remember from this example is that the points didn’t move the same way. That effect is what we call “non-linear”, and it is very important to neural networks. A few paragraphs above, I explained why applying linear functions several times would get us nowhere. Visually, what’s happening is that the matrix multiplications move every point in sort of the same way.
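
Here is a small numeric check of why stacking linear layers gets us nowhere: applying W1 and then W2 gives exactly the same result as applying the single matrix W2·W1. Inserting a ReLU between the layers breaks that equivalence. The matrices and input are arbitrary illustrative values, and biases are omitted for brevity (with biases, the composition is still a single affine map).

```python
def matmul(A, B):
    """Matrix product of two list-of-lists matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def apply(M, v):
    """Matrix-vector product."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def relu(v):
    return [max(0.0, t) for t in v]

W1 = [[1.0, 2.0], [0.5, -1.0]]
W2 = [[3.0, -1.0], [2.0, 0.0]]
x = [1.0, 2.0]

two_layers = apply(W2, apply(W1, x))       # layer by layer
one_layer = apply(matmul(W2, W1), x)       # collapsed into one matrix
with_relu = apply(W2, relu(apply(W1, x)))  # non-linearity in between

print(two_layers, one_layer)  # identical
print(with_relu)              # different
```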

A neural network is a machine learning algorithm that is used to model complex patterns in data. One of the classic demonstrations of neural networks is solving the XOR problem. The XOR problem is a classic example of a problem that is not linearly separable. This means that no straight line can be drawn to separate the two classes of data. Neural networks are well suited to this type of problem because they can learn to recognize patterns that are not linearly separable.

As mentioned earlier, we have measured the performance on the N-bit parity problem by randomly varying the input dimension from 2 to 25. The L1 loss function has been used to visualize the deviations between the predicted and desired values in each case. The proposed model has shown much smaller loss values than the πt-neuron model.

- This also indicates the training issue in the case of higher-dimensional inputs.

- An artificial neural network is made of layers, and a layer is made of many perceptrons.

- The first function will be a constructor to initialize the parameters like learning rate, epochs, weight, and bias.

Neural networks are more complex to code than many other machine learning models. If we write out the whole code of a single-layer perceptron, it will exceed 100 lines. To reduce the effort and make the code more efficient, we will take the help of Keras, an open-source Python library that runs on top of TensorFlow. As we can see, the Perceptron predicted the correct output for logical OR. Similarly, we can train our Perceptron to predict the AND operator; XOR, however, is not linearly separable, so a single perceptron cannot learn it.
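
Here is a plain-Python sketch of a perceptron class with the constructor described in the list above (learning rate, epochs, weights, bias). It is not the Keras code the text refers to; the class and method names, the step activation, zero initialization and `epochs=100` are our assumptions, while the learning rate of 0.1 comes from the text.

```python
class Perceptron:
    """Single-layer perceptron with a step activation (illustrative sketch)."""

    def __init__(self, learning_rate=0.1, epochs=100, n_inputs=2):
        # Constructor: learning rate, number of epochs, weights and bias.
        self.learning_rate = learning_rate
        self.epochs = epochs
        self.weights = [0.0] * n_inputs
        self.bias = 0.0

    def predict_one(self, x):
        z = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        return 1 if z > 0 else 0

    def fit(self, X, y):
        for _ in range(self.epochs):
            for x, target in zip(X, y):
                error = target - self.predict_one(x)
                # Perceptron rule: nudge weights and bias toward the target.
                for i, xi in enumerate(x):
                    self.weights[i] += self.learning_rate * error * xi
                self.bias += self.learning_rate * error
        return self

    def predict(self, X):
        return [self.predict_one(x) for x in X]

X = [[0, 0], [0, 1], [1, 0], [1, 1]]
or_model = Perceptron().fit(X, [0, 1, 1, 1])
print(or_model.predict(X))   # [0, 1, 1, 1]

xor_model = Perceptron().fit(X, [0, 1, 1, 0])
print(xor_model.predict(X))  # cannot equal [0, 1, 1, 0]: one line is not enough
```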

The basics of neural networks

It’s differentiable, so we can comfortably perform backpropagation to improve our model. To bring everything together, we create a simple Perceptron class with the functions we just discussed. We have some instance variables, like the training data, the target, the number of input nodes and the learning rate.

Therefore, our proposed model has overcome the limitations of the previous πt-neuron model. The results in Tables 5 and 6 show that the πt-neuron model has difficulty learning a highly dense XOR data distribution. However, the proposed neuron model has shown accurate classification results in each of these cases.

We can see that two boundary lines are needed to solve the problem. The sample code from this post can be found in Polaris000/BlogCode (xorperceptron.ipynb). Let’s bring everything together by creating an MLP class. All the functions we just discussed are placed in it. The plot function is exactly the same as the one in the Perceptron class.
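
The notebook's MLP class is not reproduced here. As a rough stand-in, the following is a hypothetical 2-4-1 MLP in plain Python with sigmoid units, binary cross-entropy and full-batch backpropagation. The hidden size, learning rate, seed and step count are all our assumptions, and the plot function is omitted. Small XOR networks can occasionally stall in a poor local minimum, so the printed predictions may not be [0, 1, 1, 0] for every seed.

```python
import math
import random

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

class MLP:
    """Hypothetical 2-H-1 MLP: sigmoid hidden and output units."""

    def __init__(self, n_hidden=4, learning_rate=0.5, seed=0):
        rng = random.Random(seed)
        self.lr = learning_rate
        # Random (not identical) initial weights break hidden-unit symmetry.
        self.W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # wX + b followed by a sigmoid, once per layer.
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(self.W1, self.b1)]
        y_hat = sigmoid(sum(w * hj for w, hj in zip(self.W2, h)) + self.b2)
        return h, y_hat

    def loss(self, X, Y, eps=1e-12):
        """Mean binary cross-entropy over the batch."""
        total = 0.0
        for x, y in zip(X, Y):
            p = min(max(self.forward(x)[1], eps), 1 - eps)
            total -= y * math.log(p) + (1 - y) * math.log(1 - p)
        return total / len(X)

    def train_step(self, X, Y):
        H = len(self.W2)
        gW1 = [[0.0, 0.0] for _ in range(H)]
        gb1 = [0.0] * H
        gW2 = [0.0] * H
        gb2 = 0.0
        for x, y in zip(X, Y):
            h, y_hat = self.forward(x)
            d_out = y_hat - y  # dL/dz at the output (sigmoid + cross-entropy)
            gb2 += d_out
            for j in range(H):
                gW2[j] += d_out * h[j]
                # Chain rule back into hidden unit j (uses pre-update W2).
                d_h = d_out * self.W2[j] * h[j] * (1 - h[j])
                gb1[j] += d_h
                gW1[j][0] += d_h * x[0]
                gW1[j][1] += d_h * x[1]
        n = len(X)  # average the gradients, then take one descent step
        for j in range(H):
            self.W2[j] -= self.lr * gW2[j] / n
            self.b1[j] -= self.lr * gb1[j] / n
            self.W1[j][0] -= self.lr * gW1[j][0] / n
            self.W1[j][1] -= self.lr * gW1[j][1] / n
        self.b2 -= self.lr * gb2 / n

    def predict(self, X):
        return [round(self.forward(x)[1]) for x in X]

X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [0, 1, 1, 0]
model = MLP()
initial_loss = model.loss(X, Y)
for _ in range(5000):
    model.train_step(X, Y)
final_loss = model.loss(X, Y)
print(initial_loss, final_loss, model.predict(X))
```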

In the forward pass, we apply the wX + b relation multiple times, applying a sigmoid function after each one. Though the output generation process is a direct extension of that of the perceptron, updating the weights isn’t so straightforward. Hidden layers are those layers whose nodes are neither input nor output nodes. In any iteration, whether testing or training, these nodes are passed the input from our data.
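
To illustrate the forward pass alone, here is a 2-2-1 network with hand-picked, hypothetical weights (not learned ones): hidden unit 1 behaves like OR, hidden unit 2 like NAND, and the output unit like AND of the two, which together give XOR. Each layer is one application of wX + b followed by a sigmoid.

```python
import math

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dense(x, W, b):
    """One application of the wX + b relation followed by a sigmoid."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bi) for row, bi in zip(W, b)]

# Hand-picked weights: hidden = [OR, NAND], output = AND(hidden).
W_hidden = [[20.0, 20.0], [-20.0, -20.0]]
b_hidden = [-10.0, 30.0]
W_out = [[20.0, 20.0]]
b_out = [-30.0]

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    hidden = dense(x, W_hidden, b_hidden)
    y_hat = dense(hidden, W_out, b_out)[0]
    print(x, round(y_hat, 4))
```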

The following images show that no matter how we draw a line in 2D space, we cannot separate the inputs with one output from those with the other. For example, in the first one, both inputs make XOR give 1, while for another input the output is 0; yet we cannot separate them, and unfortunately they fall on the same side of the line.

Tessellation surface formed by πt-neuron model and proposed model for two-dimensional input.

In fact, the gradients became so small so quickly that a change in a deep parameter value caused such a small change in the output that it got lost in machine noise or, in the case of probabilistic models, in dataset noise. The overall components of an MLP, like input and output nodes, activation functions, and weights and biases, are the same as those we just discussed for a perceptron. Now, coming to the question: XOR is not linearly separable. Hence we cannot directly solve the XOR problem with two neurons.
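
A back-of-the-envelope sketch of why gradients shrink in deep sigmoid networks: backpropagation multiplies one local sigmoid derivative per layer, and that derivative is never larger than 0.25. This simplification ignores the weight factors in the chain, which can amplify or further shrink the product, so it shows the best case for the activation term only.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # peaks at 0.25 when z = 0

# Even in the best case (z = 0 at every layer), the product decays as 0.25**depth.
for depth in (1, 5, 10, 20):
    print(depth, sigmoid_grad(0.0) ** depth)
```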